distinguished lecture series presents

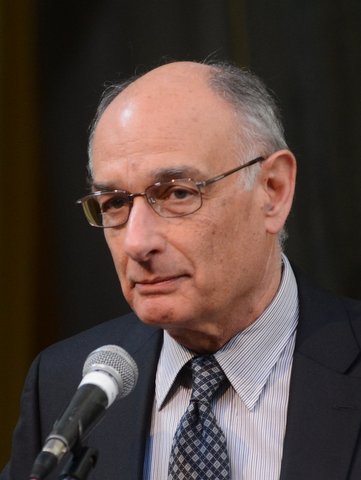

Donald Goldfarb

Columbia University

Research Area

Mathematical optimization and numerical analysis

Visit

Tuesday, May 17, 2016 to Thursday, May 19, 2016

Location

MS 6627

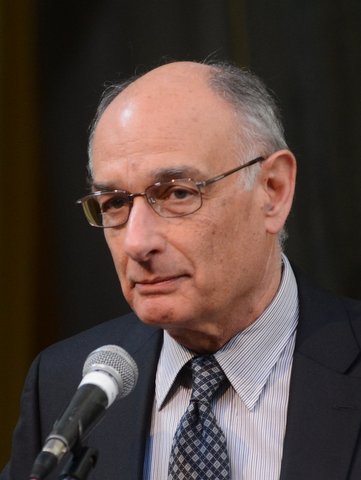

distinguished lecture series presents

Mathematical optimization and numerical analysis

Tuesday, May 17, 2016 to Thursday, May 19, 2016

MS 6627

Akshay Venkatesh

Akshay Venkatesh